
This post continues my discussion of the arguments in Nicholas Carr’s recent book The Glass Cage. The book is an extended critique of the trend towards automation. In the previous post, I introduced some of the key concepts needed to understand this critique. As I noted then, automation arises whenever a machine (broadly understood) takes over a task or function that used to be performed by a human (or non-human animal). Automation usually takes place within an intelligence ‘loop’. In other words, the machines take over from the traditional components of human intelligence: (i) sensing; (ii) processing; (iii) acting and (iv) learning. Machines can take over some of these components or all of them; humans can be fully replaced or they can share some functions with machines.

This means that automation is a complex phenomenon. There are many different varieties of automation, and they each have a unique set of properties. We should show some sensitivity to those complexities in our discussion. This makes broad-brush critiques pretty difficult. In the previous post I discussed Carr’s claim that automation leads to bad outcomes. Oftentimes the goal behind automation is to improve the safety and efficiency of certain processes. But this goal is frequently missed due to automation complacency and automation bias. Or so the argument went. I expressed some doubts about its strength toward the end of the previous post.

In this post, I want to look at another one of Carr’s arguments, perhaps the central argument in his book: the degeneration argument. According to this argument, we should not just worry about the effects of automation on outcomes; we should worry about its effects on the people who have to work with or rely upon automated systems. Specifically, we should worry about its effects on the quality of human cognition. It could be that automation is making us stupider. This seems like something worth worrying about.

Let’s see how Carr defends this argument.

1. The Whitehead Argument and the Benefits of Automation

To fully appreciate Carr’s argument, it is worth comparing it with an alternative argument, one that defends the contrary view. One such argument can be found in the work of Alfred North Whitehead. Whitehead was a famous British philosopher and mathematician, probably best known for his collaboration with Bertrand Russell on Principia Mathematica. In his 1911 work, An Introduction to Mathematics, Whitehead made the following claim:

It is a profoundly erroneous truism, repeated by all copy-books and by eminent people when they are making speeches, that we should cultivate the habit of thinking of what we are doing. The precise opposite is the case. Civilization advances by extending the number of important operations which we can perform without thinking about them. Operations of thought are like cavalry charges in a battle — they are strictly limited in number, they require fresh horses, and must only be made at decisive moments.

Whitehead may not have had modern methods of automation in mind when he wrote this — though his work did help to inaugurate the computer age — but what he said can certainly be interpreted by reference to them. For it seems like Whitehead is advocating, in this quote, the automation of thought. It seems like he is saying that the less mental labour humans need to expend, the more ‘advanced’ civilization becomes.

But Carr thinks that this is a misreading, one that is exacerbated by the fact that most people only quote the line starting ‘civilization advances…’ and leave out the rest. If you look at the last line, the picture becomes more nuanced. Whitehead isn’t suggesting that automation is an unqualified good. He is suggesting that mental labour is difficult. We have a limited number of ‘cavalry charges’. We should not be expending that mental effort on trivial matters. We should be saving it for the ‘decisive moments’.

This, then, is Whitehead’s real argument: the automation of certain operations of thought is good because it frees us up to think the more important thoughts. To put it a little more formally (and in a way that Whitehead may not have fully endorsed but which suits the present discussion):

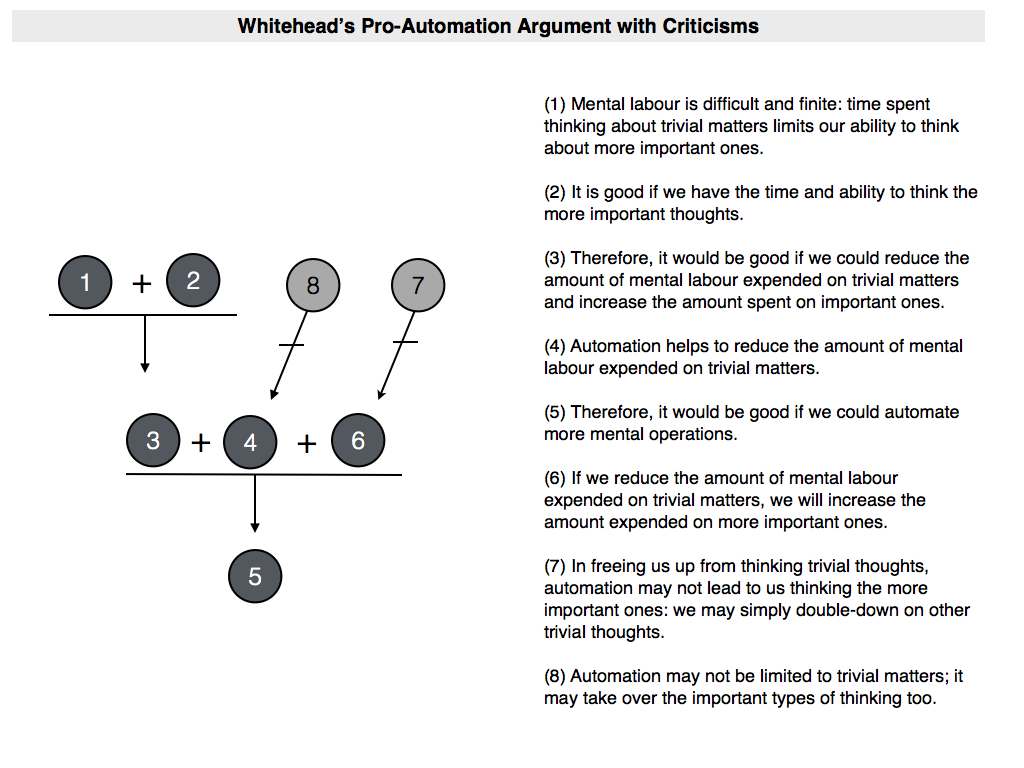

- (1) Mental labour is difficult and finite: time spent thinking about trivial matters limits our ability to think about more important ones.

- (2) It is good if we have the time and ability to think the more important thoughts.

- (3) Therefore, it would be good if we could reduce the amount of mental labour expended on trivial matters and increase the amount spent on important ones.

- (4) Automation helps to reduce the amount of mental labour expended on trivial matters.

- (5) Therefore, it would be good if we could automate more mental operations.

This argument contains the optimism that is often expressed in debates about automation and the human future. But is this optimism justified?

2. The Structural Flaws in the Whitehead Argument

Carr doesn’t think so. Although he never sets it out in formal terms, I believe that his reason for thinking this can be understood in light of the preceding version of Whitehead’s argument. Look again at premise (4) and the inference from that premise and premise (3) to (5). Do you think this forms a sound argument? You shouldn’t. There are at least two problems with it.

In the first place, it seems to rely on the following implicit premise:

- (6) If we reduce the amount of mental labour expended on trivial matters, we will increase the amount expended on more important ones.

This premise, which is distinct from premise (1), is needed if we wish to reach the conclusion. Without it, the conclusion does not follow. And once this implicit premise is made explicit, you begin to see where the problems might lie. For it could be that humans are simply lazy: if you free them from thinking about trivial matters, they won’t expend the excess mental labour on thinking the hard thoughts. They’ll simply double down on other trivial matters.

The second problem is more straightforward, but again highlights a crucial assumption underlying the Whitehead argument. The problem is that the move to conclusion (5) seems to assume that automation will always be focused on the more trivial thoughts, and that machines will never be able to take over the higher forms of thinking and creativity. This assumption may also turn out to be false.

We then have two criticisms of the Whitehead argument. I’ll give them numbers and plug them into an argument map:

- (7) In freeing us up from thinking trivial thoughts, automation may not lead to us thinking the more important ones: we may simply double down on other trivial thoughts.

- (8) Automation may not be limited to trivial matters; it may take over the important types of thinking too.

But this is to speak in fairly abstract terms. Are there any concrete reasons for thinking that these implicit premises and underlying assumptions actually count against the Whitehead argument? Carr thinks that there are. In particular, he thinks that there is some strong evidence from psychology suggesting that the rise of automation doesn’t simply free us up to think more important thoughts. On the contrary, he thinks that the evidence suggests that our creeping reliance on automation is degrading the quality of our thinking.

3. The Degeneration Argument

Carr’s argument is quite straightforward. It starts with a discussion of the generation effect. This is something that was discovered by psychologists in the 1970s. The original experiments had to do with memorisation and recall. The basic idea is that the more cognitive work you have to do during the memorisation phase, the better able you are to recall the information at a future date. Suppose I gave you a list of contrasting words to remember:

HOT: COLD

TALL: SHORT

How would you go about doing it? Unless you have some familiarity with memorisation techniques (like the linking or loci methods), you’d probably just read through the list and start rehearsing it in your mind. This is a reasonably passive process. You absorb the words from the page and try to drill them into your brain through repetition. Now suppose I gave you the following list of incomplete word pairs, and then asked you to both (a) complete the pairs; and (b) memorise them:

HOT: C___

TALL: S___

This time the task requires more cognitive effort. You actually have to generate the matching pair in your mind before you can start trying to remember the list. In experiments, researchers have found that people who were forced to take this more effortful approach were significantly better at remembering the information at a later point in time. This is the generation effect in action. Although the original studies were limited to rote memorisation, later studies revealed that it has a much broader application. It helps with conceptual understanding, problem solving, and recall of more complex materials too. As Carr puts it, these experiments show us that ‘our mind[s] rewards us with greater understanding’ when we exert more focus and attention.

The generation effect has a corollary: the degeneration effect. If anything that forces us to use our own internal cognitive resources will enhance our memory and understanding, then anything that takes away the need to exert those internal resources will reduce our memory and understanding. This is what seems to be happening in relation to automation. Carr cites the experimental work of Christof van Nimwegen in support of this view.

Van Nimwegen has done work on the role of assistive software in conceptual problem solving. Some of you are probably familiar with the Missionaries and Cannibals game (a classic logic puzzle about getting a group of missionaries across a river without their being eaten by the cannibals). The game comes with a basic set of rules, and you must get the missionaries across the river in the fewest trips while conforming to those rules. Van Nimwegen performed experiments contrasting two groups of problem solvers on this game. The first group worked with a simple software program that provided no assistance to those playing the game. The second group worked with a software program that offered on-screen prompts, including details as to which moves were permissible.

The results were interesting. People using the assistive software could solve the puzzles and performed better at first, thanks to the assistance, but their advantage faded in the long run. The first (unassisted) group emerged as the winners: they solved the puzzles more efficiently and with fewer wrong moves. What’s more, in a follow-up study performed 8 months later, members of the first group were found to be better able to recall how to solve the puzzle. Van Nimwegen went on to replicate that result in experiments involving different types of task. This suggests that automation can have a degenerating effect, at least when compared to traditional methods of problem-solving.

Carr suggests that other evidence confirms the degenerating effect of automation. He cites a study of accounting firms using assistive software, which found that human accountants relying on this software had a poorer understanding of risk. Likewise, he gives the (essentially anecdotal) example of software engineers relying on assistive programs to clean up their dodgy first-draft code. In the words of one Google software developer, Vivek Haldar, this has led to “Sharp tools, dull minds.”

Summarising all this, Carr seems to be making the following argument. This could be interpreted as an argument in support of premise (7), given above. But I prefer to view it as a separate counterargument because it also challenges some of the values underlying the Whitehead argument:

- (9) It is good if humans can think higher thoughts (i.e. have some complexity and depth of understanding).

- (10) In order to think higher thoughts, we need to engage our minds, i.e. use attention and focus to generate information from our own cognitive resources (this is the ‘generation effect’).

- (11) Automation inhibits our ability to think higher thoughts by reducing the need to engage our own minds (the ‘degeneration effect’).

- (12) Therefore, automation is bad: it reduces our ability to think higher thoughts.

4. Concluding Thoughts

What should we make of this argument? I am perhaps not best placed to critically engage with some aspects of it. In particular, I am not best placed to challenge its empirical foundation. I have located the studies mentioned by Carr and they all seem to support what he is saying, and I know of no competing studies, but I am not well-versed in the literature. For this reason, I just have to accept this aspect of the argument and move on.

Fortunately, there are two other critical comments I can make by way of conclusion. The first has to do with the implications of the degeneration effect. If we assume that the degeneration effect is real, it may not imply that we are generally unable to think higher thoughts. It could be that the degeneration is localised to the particular set of tasks that is being automated (e.g. solving the missionaries and cannibals game). And if so, this may not be a big deal. If those tasks are not particularly important, humans may still be freed up to think the more important thoughts. It is only if the effect is more widespread that a problem arises. And I don’t wish to deny that this could be the case. Automation systems are becoming more widespread and we now expect to rely upon them in many aspects of our lives. This could result in the spillover of the degeneration effect.

The other comment has to do with the value assumption embedded in premise (9) (which was also included in premise (2) of the Whitehead argument). There is some intuitive appeal to this. If anyone is going to be thinking important thoughts, I would certainly like for that person to be me. Not just for the social rewards it may bring, but because there is something intrinsically valuable about the act of high-level thinking. Understanding and insight can be their own reward.

But there is an interesting paradox to contend with here. When it comes to the performance of most tasks, the art of learning involves transferring the performance from the conscious realm to the sub-conscious realm. Carr mentions the example of driving: most people know how difficult it is to learn how to drive. You have to perform a sequence of smoothly coordinated and highly unnatural actions. This takes a great deal of cognitive effort at first, but over time it becomes automatic. This process is well-documented in the psychological literature and is referred to as ‘automatization’. So, ironically, developing our own cognitive resources may simply result in further automation, albeit this time automation that is internal to us.

The assumption is that this internal form of automation is superior to the external form of automation that comes with outsourcing the task to a machine. But is this necessarily true? If the fear is that externalisation causes us to lose something fundamental to ourselves, then maybe not. Could the external technology not simply form part of ‘ourselves’ (part of our minds)? Would externalisation not then be ethically on a par with internal automation? This is what some defenders of the extended mind hypothesis like to claim, and I discuss the topic at greater length in another post. I direct the interested reader there for more.

That’s it for this post.